
Rules

Complex systems are composed of agents governed by simple input/output rules that determine their behaviors.

One of the intriguing characteristics of complex systems is that highly sophisticated emergent phenomena can be generated by seemingly simple agents. These agents follow very simple rules - with dramatic results.

Simple Rules - Complex Outcomes

Slime mold forming the Tokyo subway map

Take it in Context

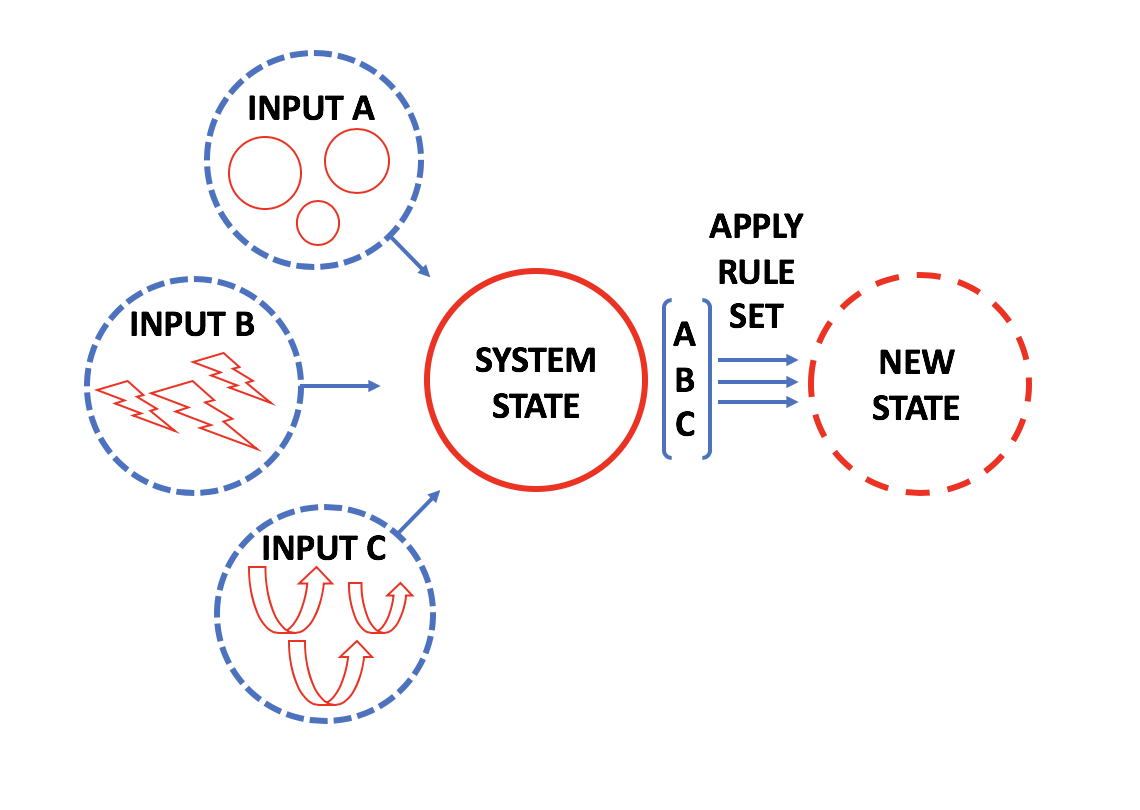

We can conceptualize bottom-up agents as simple entities with limited action possibilities. The decision of which action possibility to deploy is regulated by basic rules that pertain to the context in which the agents find themselves. Another way to think of 'rules' is therefore to relate them to the idea of a simple set of input/output criteria.

An agent exists within a particular context that contains a series of factors considered as relevant inputs: one input might pertain to the agent's previous state (moving left or right); one might pertain to some differential in the agent's context (more or less light); and one might relate to the state of surrounding agents (greater or fewer). An agent processes these inputs and, according to a particular rule set, generates an output: 'stay the course', 'shift left', 'back up'.

input/output rule factoring three variables
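As a minimal sketch of such an input/output rule, the three inputs described above can be bundled together and mapped to one of the three outputs. The names `Inputs` and `apply_rule`, and the particular conditions chosen, are assumptions made purely for illustration; they are not taken from any specific model.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Inputs:
    previous_heading: str  # the agent's previous state: "left" or "right"
    light_change: float    # differential in the context: > 0 means more light
    neighbor_change: int   # surrounding agents: > 0 means more neighbors


def apply_rule(inputs: Inputs) -> str:
    """Map the three inputs to one output (the conditions are illustrative)."""
    # Both the light and the neighbors are dropping away: retreat.
    if inputs.light_change < 0 and inputs.neighbor_change < 0:
        return "back up"
    # Light is fading while the agent was heading right: try the other side.
    if inputs.light_change < 0 and inputs.previous_heading == "right":
        return "shift left"
    # Otherwise nothing suggests a change of behavior.
    return "stay the course"


print(apply_rule(Inputs("right", -0.2, 1)))  # prints "shift left"
```

The agent here has no memory and no goal; it only reacts to its immediate context, which is all that a 'simple rule' in this sense requires.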

In complex adaptive systems, an aspect of this 'context' must include the output behaviors generated by surrounding agents. Further, while for natural systems the agent's context might include all kinds of factors that serve as relevant inputs, in artificial complex systems novel emergent behavior can manifest even if the only thing informing the context is surrounding agent behaviors.

Example:

Early complexity models focused precisely on the generative capacity of simple rules within a context composed purely of other agents. John Conway's 'Game of Life' is a prime example of how a very basic rule set can generate a host of complex phenomena. Starting from agents arranged on a cellular grid, each either 'on' or 'off' depending on the status of the agents in neighboring cells, we see the generation of a host of rich forms. The game unfolds using only four rules that govern whether an agent is 'on' (alive) or 'off' (dead). For every iteration:

- 'Off' cells turn 'On' IF they have three 'alive' neighbors;

- 'On' cells stay 'On' IF they have two or three 'alive' neighbors;

- 'On' cells turn 'Off' IF they have one or fewer 'alive' neighbors;

- 'On' cells turn 'Off' IF they have four or more 'alive' neighbors.

The resulting behavior has an 'alive' quality: agents flash on and off over multiple iterations, seem to converge, move along the grid, swallow other forms, duplicate, and reproduce.
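The four rules listed above can be sketched in a few lines. This version assumes an unbounded grid and represents the system as a set of (row, column) coordinates of 'on' cells; the function name `step` and that representation are choices made for this illustration.

```python
from collections import Counter


def step(alive: set[tuple[int, int]]) -> set[tuple[int, int]]:
    """Apply one iteration of the Game of Life to the set of 'on' cells."""
    # Count, for every cell next to at least one 'on' cell, how many
    # of its eight neighbors are 'on'.
    counts = Counter(
        (row + d_row, col + d_col)
        for row, col in alive
        for d_row in (-1, 0, 1)
        for d_col in (-1, 0, 1)
        if (d_row, d_col) != (0, 0)
    )
    # 'Off' cells with exactly three neighbors turn 'on'; 'on' cells with
    # two or three neighbors stay 'on'; every other cell is 'off'.
    return {
        cell
        for cell, n in counts.items()
        if n == 3 or (n == 2 and cell in alive)
    }


# A 'glider': five cells that reappear one cell further along the diagonal
# every four iterations, one of the forms that 'move along the grid'.
glider = {(0, 1), (1, 2), (2, 0), (2, 1), (2, 2)}
state = glider
for _ in range(4):
    state = step(state)
```

Nothing in `step` mentions gliders or movement; the travelling form is a product of the rules being applied repeatedly.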

Conway's Game of Life

Principle: One agent's output is another agent's input!

As we can see from the Game of Life, starting with very basic agents that rely only on other agents' outputs as their input, a basic rule set can nonetheless generate extremely rich outputs.

While the Game of Life is an artificial complex system (modeled in a computer), we can observe in all real-world examples of complexity that the agents of the system are both responders to inputs from their environmental context and shapers of that same environmental context. This means that the behaviors of all agents necessarily become entangled, entering into feedback loops with one another.

Adjusting rules to targets

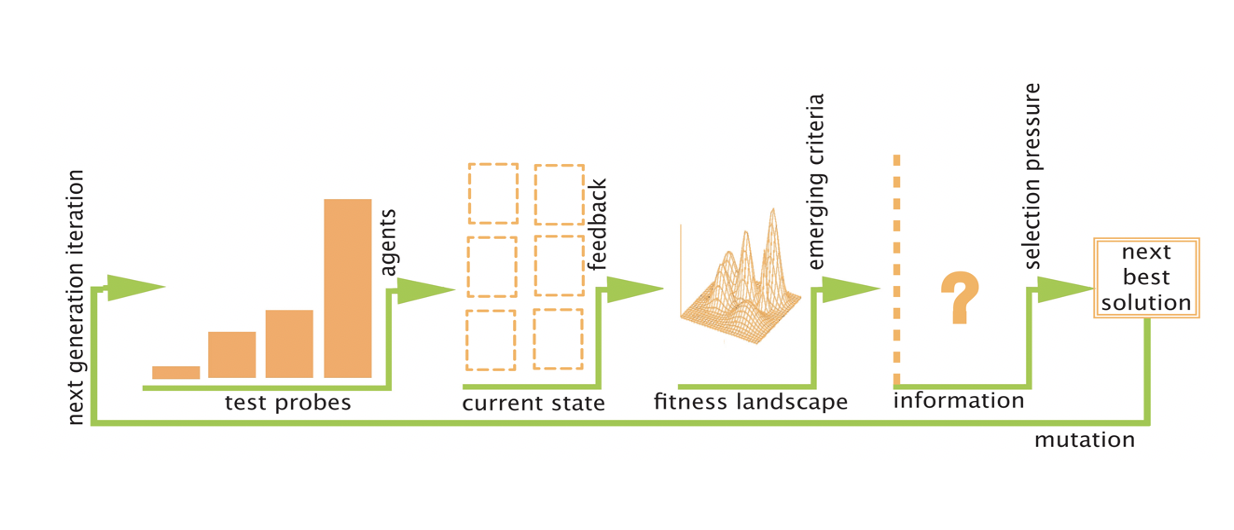

It is intriguing to observe that, simply by virtue of simple rule protocols that are pre-set by the programmer and played out over multiple iterations, complex emergent behavior can be produced. Here we observe the 'fact' of emergence from simple rules. But we can also imagine natural complex systems where agent rules shift over time. While this could happen arbitrarily, it makes sense from an evolutionary perspective when some agent rules are more 'fit' than others. This results in a kind of selection pressure, determining which rule protocols are preserved and maintained. Here, the discovery of simple rule sets that yield better enacted results exemplifies the 'function' of emergence.

When we couple the notion of 'rules' with context, we are therefore stating that we are not interested in just any rule set that can generate emergent outcomes, but in specific rule sets that generate emergent outcomes that are in some way 'better' with respect to a given context. Successful rule systems imply a fit between the rules the agents are employing and how well these rules assist agents (as a collective) in achieving a particular goal within a given setting.

As a general principle we can think of successful rules as ones that minimize agent effort (energy output) to resolve a given task. That said, in complex systems we need to go a step further and analyze the collective energy output. Thus in a system the best rules will be the ones that result in minimal energy output for the system as a whole to resolve a given task. This may require 'sacrifice' on the part of an individual agent, but this sacrifice is, from a game theory perspective, still worth it at the overall system level.

As agents in a complex system enact particular rule sets, rules might be revised based on how quickly or effectively they succeed at reaching a particular target.

When targets are achieved - 'food found!' - this information becomes a relevant system input. Agents that receive this input may have a rule that advises them to persist in the behavior that led to the input, whereas agents that fail to achieve this input may have a rule that demands they revise their rule set!

Agents must therefore not only be conditioned by a set of pre-established inputs and outputs but also be able to revise their rules. This requires them to gain feedback about the success of their rules and to test modifications. A way of thinking about this is captured in the idea of agent-held schemata about their behavior relative to their context, which can be updated over time so as to better align with it. Further, if multiple agents test different rule regimes simultaneously, then there may be other 'rules' that help agents learn from one another. If a particular rule leads agents to food, on average, in ten steps, and another rule, on average, leads agents to food in six steps, then agents adopting the second rule should have the capacity to disseminate their rule set to other agents, eventually suppressing the first, weaker rule. This process of dissemination requires some form of communication or steering, which is often done via the use of Stigmergy.
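The dissemination of the six-step rule over the ten-step rule can be sketched as follows. This is an illustration under stated assumptions: agents sit on a ring, each can see the average performance of its two immediate neighbors, and each copies the best rule it can see. Direct imitation is used here in place of stigmergic signalling, and the names `AVERAGE_STEPS` and `imitate` are hypothetical.

```python
# Average number of steps each rule needs to reach food (figures from the text).
AVERAGE_STEPS = {"rule_a": 10, "rule_b": 6}


def imitate(rules: list[str]) -> list[str]:
    """One round in which every agent adopts the best rule in its neighborhood."""
    n = len(rules)
    updated = []
    for i, own_rule in enumerate(rules):
        # Each agent sees only its left and right neighbors on the ring.
        visible = (own_rule, rules[(i - 1) % n], rules[(i + 1) % n])
        # Fewer steps to food is better; on a tie the agent keeps its own rule.
        updated.append(min(visible, key=lambda rule: AVERAGE_STEPS[rule]))
    return updated


# Five agents use the weaker rule and a single agent has found the better one.
population = ["rule_a"] * 5 + ["rule_b"]
for _ in range(3):
    population = imitate(population)
print(population)  # every agent now uses "rule_b"
```

No agent ever compares the two rules globally; the weaker rule is suppressed purely through local exchanges.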

Enacted 'rules' are therefore provisional tests of how well an output protocol achieves a given goal. The test results then become another form of input:

bad test results also become an agent input, telling the agent to: "generate a rule mutation as part of your next enacted output".
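A minimal sketch of this test-and-revise loop follows. The toy task is an assumption made for illustration: food sits twelve cells away, the agent's rule is a fixed stride, and food counts as 'found' only if the agent lands exactly on it within ten steps. The names `enact` and `run_agent` are hypothetical.

```python
import random


def enact(stride: int, food_at: int = 12, max_steps: int = 10) -> bool:
    """Enact the rule 'advance `stride` cells per step'; report if food was found."""
    position = 0
    for _ in range(max_steps):
        position += stride
        if position == food_at:
            return True
    return False


def run_agent(stride: int, trials: int, rng: random.Random) -> list[tuple[int, bool]]:
    """Test the current rule repeatedly, mutating it after every failed test."""
    history = []
    for _ in range(trials):
        found = enact(stride)
        history.append((stride, found))
        if not found:
            # A bad test result becomes an input: mutate the rule before
            # it is enacted again.
            stride = max(1, stride + rng.choice([-1, 1]))
        # A good result ('food found!') means the agent persists with its rule.
    return history


history = run_agent(stride=5, trials=5, rng=random.Random(0))
```

Starting from a stride of 5, the first test fails, and either possible mutation (a stride of 4 or 6) reaches the food, so the agent settles on whichever one it happened to try.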

Novel Rule formation:

Rules might be altered in different ways. At the level of the individual -

- an agent might choose to revise how it values or factors inputs in a new way;

- an agent might choose to revise the nature of its outputs in a new way.

In the first instance, the impact or value assigned to particular inputs (needed to trigger an output) might change based on how successful previous input-weighting strategies were in reaching a target goal. In order for this to occur, the agent must have the capacity to assign new 'weights' (values or significance) to an input.
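One way to picture this re-weighting is a threshold rule whose weights are nudged after each unsuccessful decision. The sketch below swaps in a perceptron-style update as the revision mechanism; that choice, along with the names `decide` and `reweight`, the threshold and the learning rate, is an assumption for illustration only.

```python
def decide(weights: list[float], inputs: list[float], threshold: float = 1.0) -> bool:
    """Trigger the output when the weighted sum of inputs reaches the threshold."""
    return sum(w * x for w, x in zip(weights, inputs)) >= threshold


def reweight(
    weights: list[float],
    inputs: list[float],
    acted: bool,
    should_have_acted: bool,
    rate: float = 0.5,
) -> list[float]:
    """Raise or lower the weight of every input that was present during a wrong decision."""
    error = int(should_have_acted) - int(acted)  # +1, 0 or -1
    return [w + rate * error * x for w, x in zip(weights, inputs)]


weights = [0.2, 0.2, 0.2]
inputs = [1.0, 0.0, 1.0]          # the first and third inputs are present
acted = decide(weights, inputs)   # False: weighted sum is below the threshold
# Feedback says the agent should have acted, so the active inputs gain weight.
weights = reweight(weights, inputs, acted, should_have_acted=True)
```

After the update the same inputs are enough to trigger the output, while the weight of the absent second input is left untouched.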

In the second instance, the agent requires enough inherent flexibility or 'Degrees of Freedom' to generate more than one kind of output. For example, if an agent can only be in one of two states, it has very little ability to realign outputs. But if an agent has the capacity to deploy itself in multiple ways, then there is more flexibility in the character of the rules it can formulate. This ties back to the idea of Adaptive Capacity.

Rules might also be revised through processes occurring at the group level. Here, even if agents are unable to alter their performance at the individual level, there may still be mechanisms operating at the level of the group which result in better rules propagating. In this case, we would have a population of agents, each with specific rule sets that vary amongst them. Even if each individual agent has no ability to revise their particular rules, at the level of the population -

- poor rules result in agent death - there is no internal recalibration - but agents with bad rules simply cease to exist;

- 'good' rules can be reproduced - there is no internal recalibration - but agents with good rules persist and reproduce.
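Population-level revision of this kind can be sketched without giving any individual agent the ability to change. In the toy setup below, which is an assumption for illustration, each agent's fixed rule is a stride, food is twelve cells away, and an agent dies if it needs more than four steps to reach it. The names `steps_to_food` and `next_generation` are hypothetical.

```python
from itertools import cycle, islice


def steps_to_food(stride: int, distance: int = 12) -> int:
    """Number of steps an agent with a fixed stride needs to cover the distance."""
    return -(-distance // stride)  # ceiling division


def next_generation(population: list[int], energy_budget: int = 4) -> list[int]:
    """Agents with poor rules die; survivors reproduce their rules unchanged."""
    survivors = [s for s in population if steps_to_food(s) <= energy_budget]
    if not survivors:
        return []
    # Refill the population to its original size with copies of the
    # surviving rules; no agent recalibrates its own rule.
    return list(islice(cycle(survivors), len(population)))


print(next_generation([1, 2, 3, 4, 6]))  # prints [3, 4, 6, 3, 4]
```

The rules with strides 1 and 2 disappear from the population even though no agent ever revised anything.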

We can imagine that the two means of rule revision - those working at the individual level and those at the population level - might work in tandem. While all of this should not seem new (it is analogous to General Darwinism), since complex systems are not always biological ones, it can be helpful to consider how the processes of system adaptation (evolution) can instead be thought of as a process of rule revision.

Through agent-to-agent interaction, over multiple iterations, weaker protocols are filtered out, and stronger protocols are maintained and grow. That said, the ways in which rules are revised are not entirely predictable: there are many ways in which rules might be revised, and more than one kind of revision may prove successful (as the saying goes, there is more than one way to skin a cat). Accordingly, the trajectory of these systems is always contingent and subject to historical conditions.

Fixed Rules with thresholds of enactment

Not all complex adaptive behaviors require that rules be revised. We began with artificial systems - cellular automata - where the agent rules are fixed but we still see complex behaviors. There are also examples of natural complex systems where rules are fixed, but still drive complex behaviors. These rules, rather than being the result of a computer programmer arbitrarily determining an input/output protocol, are the result of fundamental laws (or rules) of physics or chemistry.

One particularly beautiful example of non-programmed natural rules resulting in complex behaviors is the Belousov-Zhabotinsky (BZ) chemical oscillator. Here, fixed chemical interaction rules lead to complex form generation:

BZ chemical oscillator

In this particular reaction, as in other chemical oscillators, there are two interacting chemicals, or two 'agent populations', which react in ways that are auto-catalytic. The output generated by the production of one of the chemicals becomes the input needed for the generation of the other chemical. Each chemical is associated with a particular color, which appears only when that chemical is present in sufficient concentrations. The concentrations of these chemicals augment and diminish at different reaction speeds, leading to shifting concentrations of the coupled pair. As concentrations rise and fall, we see emergent and oscillating color arrays.
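The rise and fall of two coupled concentrations under fixed rules can be sketched with the Brusselator, a textbook abstract model of an auto-catalytic chemical oscillator. It is not the actual BZ reaction mechanism and is used here only as a stand-in; the parameter values, step size and simple Euler integration are likewise assumptions for illustration.

```python
def simulate(
    a: float = 1.0,
    b: float = 3.0,
    x: float = 1.0,
    y: float = 1.0,
    dt: float = 0.01,
    steps: int = 5000,
) -> tuple[list[float], list[float]]:
    """Integrate the Brusselator with fixed Euler steps; return both concentration series."""
    xs, ys = [x], [y]
    for _ in range(steps):
        autocatalysis = x * x * y               # 2X + Y -> 3X: X speeds its own production
        dx = a + autocatalysis - (b + 1.0) * x  # X is fed in, converted to Y, and drained
        dy = b * x - autocatalysis              # Y is produced from X and consumed by X
        x, y = x + dt * dx, y + dt * dy
        xs.append(x)
        ys.append(y)
    return xs, ys


xs, ys = simulate()
late_x = xs[len(xs) // 2:]
print(f"x keeps swinging between {min(late_x):.2f} and {max(late_x):.2f}")
```

The reaction rules never change during the run, yet with `b` larger than `1 + a**2` the concentrations do not settle; they keep cycling, which is the numerical counterpart of the oscillating colors.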


Cite this page:

Wohl, S. (2022, 30 May). Rules. Retrieved from https://kapalicarsi.wittmeyer.io/definition/rule-based

Rules was updated May 30th, 2022.


This is a list of People that Rules is related to.

Segregation model

Economist who developed one of the first cellular automata demonstrations, showing how segregation of agents will emerge as a phenomenon due to simple rules that, in and of themselves, do not appear to be strongly linked to segregation outcomes.

Urban Computational Modeling

Mike Batty is one of the key contributors to modeling cities as Complex Adaptive Systems.

Cellular Automata | Sugarscape

Cellular Automata/Game Theory

Game of Life

Cellular Automata

Chris Langton is a research and computer scientist. His research interests include artificial life, complex adaptive systems, distributed dynamical systems, multi-agent systems, simulation technology, and the role of information in physics.

Reaction/Diffusion | Computation

Diffusion model spots

This is a list of Terms that Rules is related to.

Related to the idea of Iterations that accumulate over time.

Agents within a Complex system can help one another achieve more 'fit' behaviors by providing signals of past success: this 'marking' of past work is known as 'Stigmergy'.

CAS Systems develop order or pattern ‘for free’: this means that order arises as a result of independent agent behaviors, without need for other inputs.

Agents in a Complex System are guided by neighboring agents - nonetheless leading to global order.

Agents in the CAS constantly adjust their possible behaviors to inputs - maintaining fitness over time.

CAS systems evolve over the course of time.

Building blocks form the foundation of larger scale patterns within Complex Systems.

The nature of a building block varies according to the system: it may take the form of an ant, a cell, a neuron or a building.

This is a list of Urban Fields that Rules is related to.

Cellular Automata & Agent-Based Models offer city simulations whose behaviors we learn from. What are the strengths & weaknesses of this mode of engaging urban complexity?

There is a large body of research that employs computational techniques - in particular agent-based modeling (ABM) and cellular automata (CA) - to understand complex urban dynamics. This strategy looks at how rule-based systems yield emergent structures.

Many cities around the world self-build without top-down control. What do these processes have in common with complexity?

Cities around the world are growing without the capacity for top-down control. Informal urbanism is an example of bottom-up processes that shape the city. Can these processes be harnessed in ways that make them more effective and productive?

New ways of modeling the physical shape of cities allow us to shape-shift at the touch of a keystroke. Can this ability to generate a multiplicity of possible future urbanities help make better cities?

This is a list of Key Concepts that Rules is related to.

'Degrees of freedom' is the way to describe the potential range of behaviors available within a given system. Without some freedom for a system to change its state, no complex adaptation can occur.

Understanding the degrees of freedom available within a complex system is important because it helps us understand the overall scope of potential ways in which a system can unfold. We can imagine that a given complex system is subject to a variety of inputs (many of which are unknown), but then we must ask, what is the system's range of possible outputs?

Navigating Complexity © 2015-2025 Sharon Wohl, all rights reserved. Developed by Sean Wittmeyer
